Idea

Instead of a walking cane that blind people use to feel their way around, there should be a laser cane that measures the distance to nearby objects and reports it to the user.

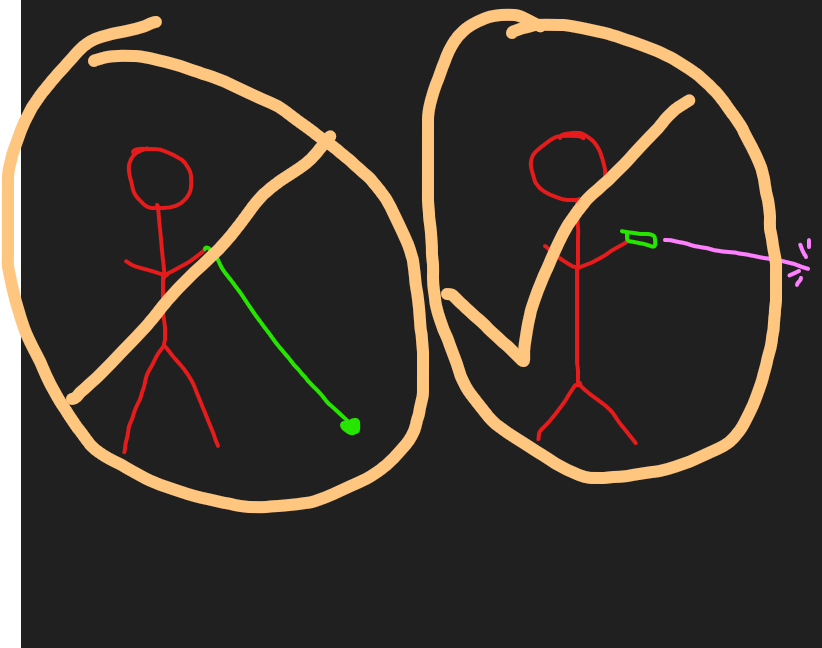

The lidar would have the same form factor as a regular torch. Distance measurements could be fed back to the user by vibration or audibly, perhaps with the kind of audio modulation that metal detectors use. The user would then wave the lidar around rapidly to scan for potential obstacles. I think this manual scanning creates the opportunity for the meat based neural net to do some good learning and come up with a proper map of the surroundings. It’s pretty trivial to create a lidar with a range of 20m, and that would already be more than enough for a laser cane.
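To make the audio feedback idea concrete, here is a rough sketch of mapping a distance reading to a tone, metal detector style, where closer obstacles give a higher pitch. The function name, the 200 Hz to 2 kHz tone range and the log-scale mapping are all assumptions picked for illustration, not anything that has been tested on a real device.

```python
def distance_to_pitch_hz(distance_m, max_range_m=20.0, low_hz=200.0, high_hz=2000.0):
    """Map a distance reading to a tone frequency (closer means higher pitch).

    All default values are illustrative assumptions.
    """
    # Clamp the reading so out-of-range values still produce a sensible tone.
    d = min(max(distance_m, 0.0), max_range_m)
    # fraction is 1.0 at zero distance and 0.0 at maximum range.
    fraction = 1.0 - d / max_range_m
    # Interpolate on a log scale, since pitch perception is roughly logarithmic.
    return low_hz * (high_hz / low_hz) ** fraction


if __name__ == "__main__":
    for d in (0.5, 2.0, 5.0, 10.0, 20.0):
        print(f"{d:5.1f} m -> {distance_to_pitch_hz(d):7.1f} Hz")
```

The same mapping could drive a vibration motor instead, with the output interpreted as a pulse rate rather than a pitch.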

Implementation

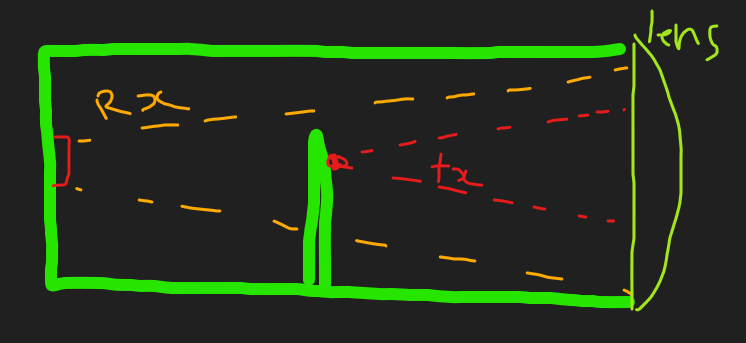

Given the pretty low performance requirements I think a coaxial design with a regular ol 905nm laser diode and silicon APD would work fine. Something like this:

Side view:

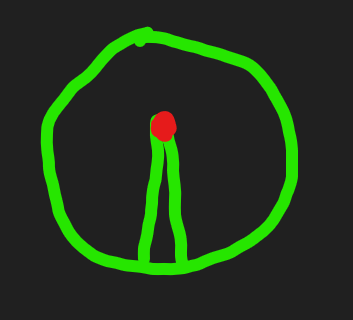

Front view:

Then a regular ol pulse detector of some kind could be used instead of a proper ADC.
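As a sanity check on what a pulse detector needs to deliver, here is the basic time-of-flight arithmetic. It assumes the detector yields a single round-trip time per laser shot (for example from a threshold crossing fed to a timer); that arrangement and the function names are assumptions for illustration only.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def round_trip_time_s(distance_m):
    """Time for a pulse to reach a target and come back."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


def tof_to_distance_m(round_trip_s):
    """Convert a measured round-trip time to a one-way distance."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def distance_resolution_m(timer_resolution_s):
    """Distance step that corresponds to one tick of the timer."""
    return SPEED_OF_LIGHT_M_S * timer_resolution_s / 2.0


if __name__ == "__main__":
    print(f"20 m round trip: {round_trip_time_s(20.0) * 1e9:.1f} ns")
    print(f"1 ns timer tick: {distance_resolution_m(1e-9) * 100:.1f} cm")
```

A 20m target gives a round trip of about 133 ns, and each nanosecond of timing resolution is worth about 15 cm of distance, which seems like plenty for finding obstacles.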

Problems

One of the main problems here is going to be close-range detection: in a coaxial design, crosstalk from the outgoing pulse reaches the receiver at about the same time as a return from a nearby object. I’m not sure how to get around this one. Perhaps some kind of baffling between the tx and the rx could get the crosstalk down to an acceptable level. Failing that, maybe a second tx lens, although that’s quite undesirable from a complexity/size/alignment/general terribleness POV. I don’t think it would be a good idea to go to an ADC and try to subtract out the waveform. That would require expensive electronics that would prolly consume 1W+, making the whole thing infeasible.
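To get a feel for how much range the crosstalk could cost, here is a small sketch that treats the receiver as blind for some blanking time after each laser shot and ignores any detector hits inside that window. The 10 ns blanking figure in the example is a made-up number, not a measurement, and the function names are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def blind_range_m(blanking_s):
    """Distance below which returns fall inside the blanking window."""
    return SPEED_OF_LIGHT_M_S * blanking_s / 2.0


def first_valid_distance_m(hit_times_s, blanking_s):
    """Distance of the first detector hit after the blanking window.

    hit_times_s holds round-trip times of detector hits for one laser shot.
    Returns None when every hit falls inside the blanking window.
    """
    for t in sorted(hit_times_s):
        if t >= blanking_s:
            return SPEED_OF_LIGHT_M_S * t / 2.0
    return None


if __name__ == "__main__":
    blanking = 10e-9  # assumed blind time after the shot, illustrative only
    print(f"blind below {blind_range_m(blanking):.2f} m")
    # A crosstalk hit at 2 ns is skipped; the 40 ns hit is a real target.
    print(first_valid_distance_m([2e-9, 40e-9], blanking))
    # A target at 0.3 m (2 ns round trip) on its own is simply lost.
    print(first_valid_distance_m([2e-9], blanking))
```

With these assumed numbers anything closer than roughly 1.5 m disappears, which is exactly the range a cane needs most, so getting the crosstalk down optically matters a lot.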