Idea

As mentioned before, the lidar has no notion of its own pose, which makes it difficult to tell the difference between:

- A spike in distance that is due to the lidar scanning over an obstacle (side view):

- A spike in distance that is due to the lidar pitching up and scanning over a different point on the floor (top view).

One of these is obviously a problem, and the other isn’t. If we knew the pose of the lidar, it would be easy to tell them apart by projecting the points into the world frame.
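
To make the projection idea concrete, here is a minimal side-view sketch. It assumes a known mounting height and a known downward pitch angle, which is exactly the pose information the lidar alone does not give us. The function name `hit_height` and the numbers are made up for illustration.

```python
import math

def hit_height(range_m, pitch_down_rad, lidar_height_m):
    """Height above the floor of the point the beam hit (side view).

    Assumes the beam points down from horizontal by pitch_down_rad.
    """
    return lidar_height_m - range_m * math.sin(pitch_down_rad)

lidar_height = 1.0  # assumed mounting height in metres

# The floor seen at two different pitches: the ranges differ,
# but the projected height stays at (roughly) zero.
for pitch_deg in (30.0, 20.0):
    pitch = math.radians(pitch_deg)
    floor_range = lidar_height / math.sin(pitch)
    print(pitch_deg, floor_range, hit_height(floor_range, pitch, lidar_height))

# An obstacle at the same 30 degree pitch: the range is shorter than the
# floor range, and the projected height is clearly above the floor.
print(hit_height(1.5, math.radians(30.0), lidar_height))
```

With the pose known, a change in range caused by pitching still projects onto the floor, while an obstacle projects to a point above it.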

Orientation integration

We can use an IMU to do this though (kind of). I have an MPU-9250, which is a 9-DOF sensor. As a quick test, let’s try integrating just the orientation of the sensor over time while rotating it about a single point to scan a nice box.

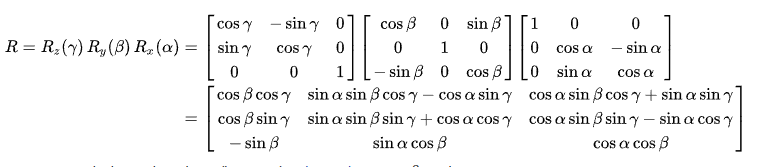

I took some random rotation matrix thing from Wikipedia:

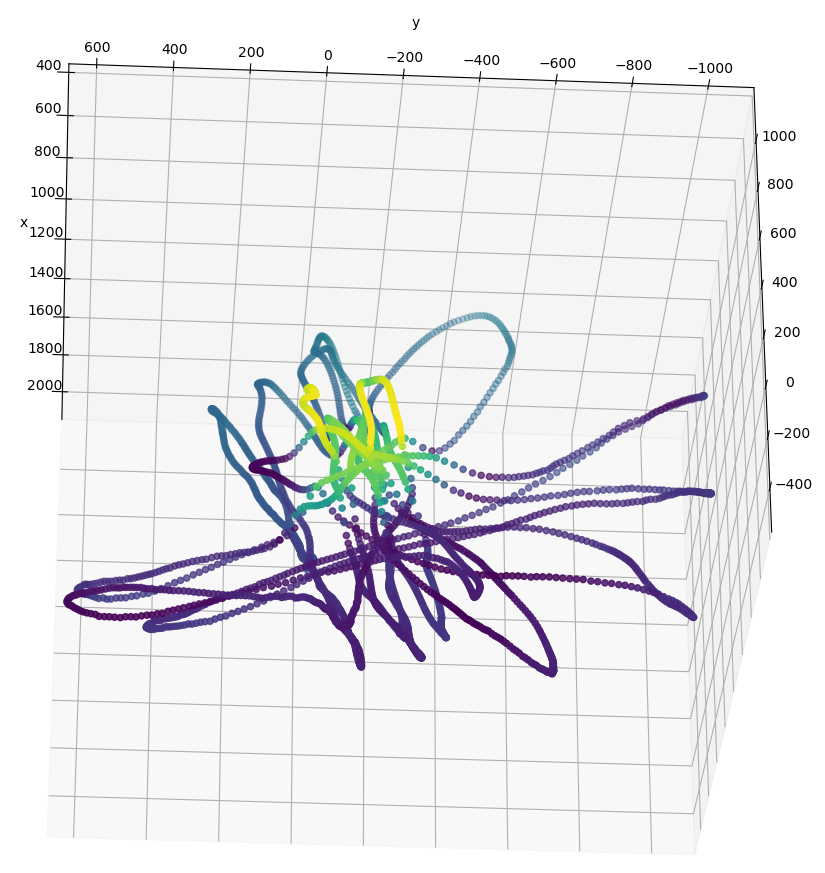

and plugged it into the measurements I had made alongside the lidar, and voila:

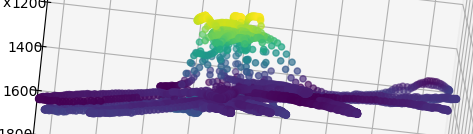

A point cloud of a box:

Looks pretty excellent to me!
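
For reference, here is a minimal sketch of this kind of orientation integration. It is not the exact matrix or code used for the plot above. It assumes the gyro reports body-frame angular rates in rad/s, that the lidar beam points along the sensor's x axis, and that each small rotation step is built with Rodrigues' rotation formula. The function names are hypothetical.

```python
import numpy as np

def rotation_from_rotvec(rotvec):
    """Rodrigues' formula: rotation matrix for a rotation vector (axis * angle, radians)."""
    angle = np.linalg.norm(rotvec)
    if angle < 1e-12:
        return np.eye(3)
    k = rotvec / angle
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)

def integrate_and_project(gyro_rad_s, ranges_m, dt):
    """Integrate gyro rates into an orientation and rotate each range reading by it."""
    R = np.eye(3)                      # sensor-to-world rotation, starts aligned
    beam = np.array([1.0, 0.0, 0.0])   # assumed beam direction in the sensor frame
    points = []
    for omega, r in zip(gyro_rad_s, ranges_m):
        # Body-frame rates, so the small rotation is applied on the right.
        R = R @ rotation_from_rotvec(np.asarray(omega, dtype=float) * dt)
        points.append(R @ (r * beam))
    return np.array(points)

# Example: spin about the z axis at 90 deg/s for one second with a constant 1 m range.
dt = 0.01
gyro = [(0.0, 0.0, np.pi / 2)] * 100
ranges = [1.0] * 100
cloud = integrate_and_project(gyro, ranges, dt)
print(cloud[-1])  # ends up close to (0, 1, 0)
```

Integrating gyro rates like this drifts over time, so it is only reasonable for a short test like this one.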

Flaw

Although I think this is a good extra source of information (it could be used to, say, fit the last 500 ms of data to a plane and calculate the deviations from that plane), it seems to me that it would suffer from the problem of constant velocity. An IMU senses acceleration and rotation rate, not velocity, so when a person is already travelling at constant velocity the IMU can’t really measure that motion, and I think this would cause problems.

As an aside, I wondered whether it was possible to use the Earth’s magnetic field to sense one’s velocity, in the same way that you can use it to sense your orientation. You would think that waving a conductor around would cause a current to be induced, but apparently not! You need the field through the circuit to be changing, and moving at constant velocity through a roughly uniform field doesn’t change it. That’s why this isn’t already a thing.
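
The plane-fitting idea mentioned above could be sketched roughly like this. It assumes the recent points (for example the last 500 ms) have already been projected into a common frame as an N×3 array. The least-squares plane is found with an SVD, which is my choice for the sketch and not something tested in this post. The function names are hypothetical.

```python
import numpy as np

def fit_plane(points):
    """Least-squares plane through an (N, 3) array: returns (centroid, unit normal)."""
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value is the
    # direction of least variance, i.e. the plane normal.
    _, _, vt = np.linalg.svd(points - centroid)
    return centroid, vt[-1]

def plane_deviations(points, centroid, normal):
    """Signed distance of each point from the plane (the sign depends on the normal's direction)."""
    return (points - centroid) @ normal

# Example: a flat patch of floor as the recent window, then one new point 0.3 m above it.
xs, ys = np.meshgrid(np.linspace(0.0, 1.0, 5), np.linspace(0.0, 1.0, 5))
recent = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(xs.size)])
centroid, normal = fit_plane(recent)
new_point = np.array([[0.5, 0.5, 0.3]])
print(np.abs(plane_deviations(new_point, centroid, normal)))
```

A large deviation from the recently fitted plane would then be a candidate obstacle, subject to the constant-velocity caveat above.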